Each year in December, senior Human-Computer Interaction researchers meet to discuss the articles submitted to the Conference on Human Factors in Computing Systems (CHI for short). CHI is the most important venue for research on Human-Computer Interaction and covers a broad range of topics, from understanding people, via novel interaction techniques, to visualization. This year, over 300 researchers came to Montreal to discuss the articles submitted to CHI 2018. Harald Reiterer and I took part in the meeting as associate chairs from Konstanz and Stuttgart. After a rigorous peer-review process, CHI accepts only about 25% of the submissions. With 16 accepted publications, the groups participating in SFB-TRR 161 from Konstanz, Tübingen, and Stuttgart have been very successful and are happy about how well their submissions have been received. At this stage, all papers are only conditionally accepted, which means that the authors have to incorporate the reviewers’ feedback before the articles are finally accepted. The technical papers will be presented in April at the conference, which will also take place in Montreal.

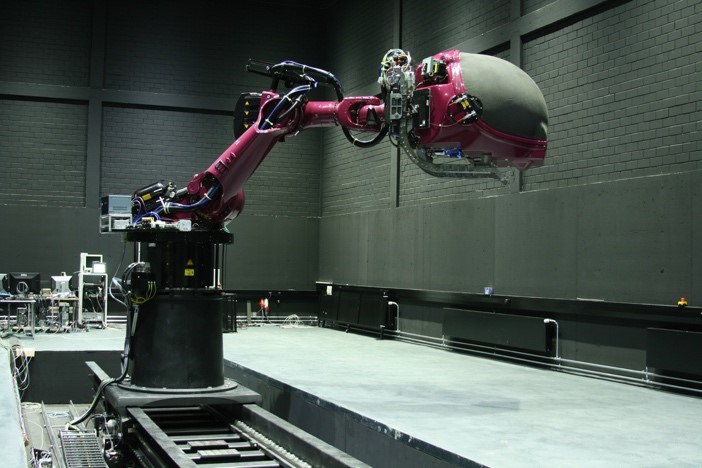

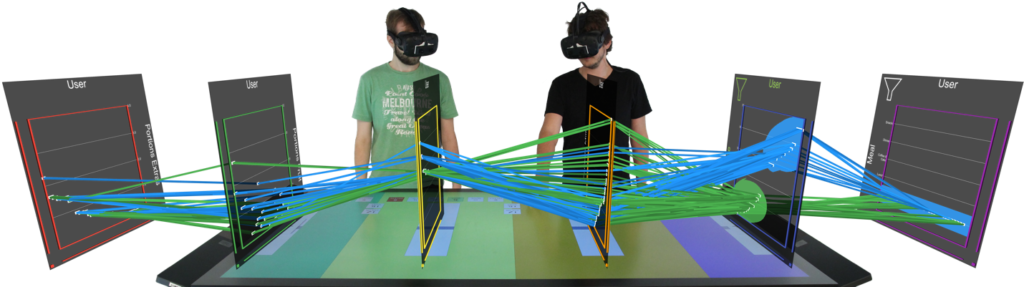

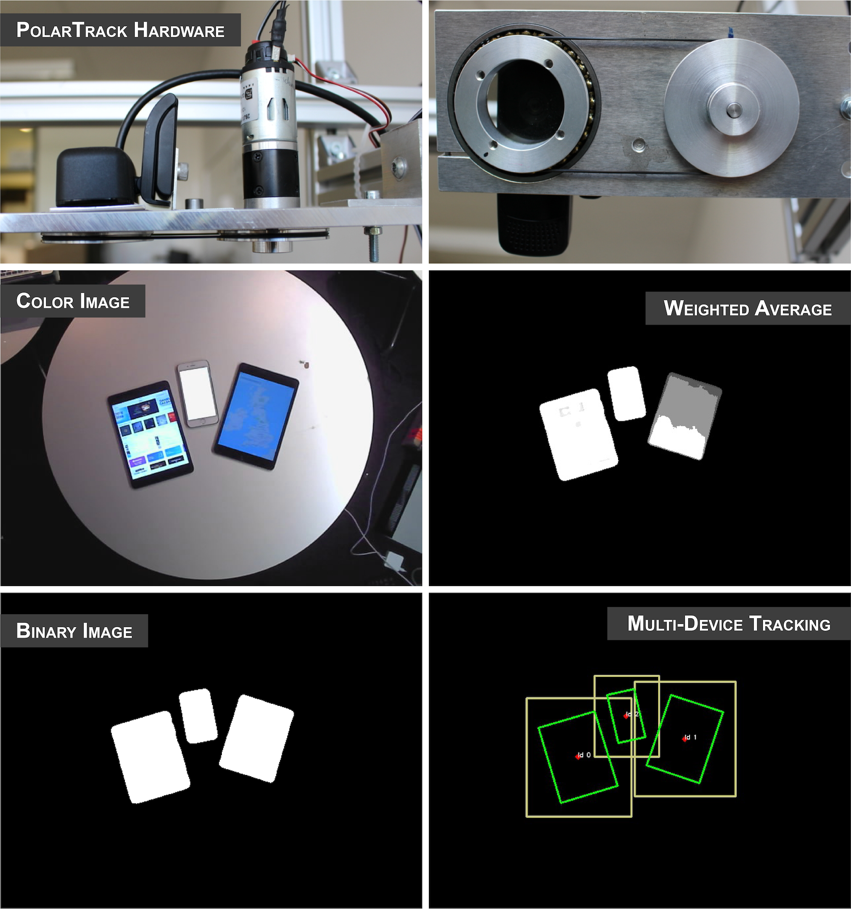

The accepted articles include work that develops a better understanding of how people use technology. The work from Tübingen addresses how humans perform in highly realistic, visually immersive environments, namely simulators for automated cars [1] and trucks [7]. Together with researchers from Oldenburg, Heinrich Bülthoff and Lewis Chuang show that real motion influences our readiness to take over control of highly automated vehicles [1]. In collaboration with Scania, Christiane Glatz et al. show that verbal commands and auditory icons designed to support task management in truck drivers favor different information processes in the brain [7]. Huy Viet Le et al. will present work in which they investigate the fingers’ range and the comfortable area for one-handed smartphone interaction [2]. Rufat Rzayev et al. studied reading on head-mounted displays and investigated the effects of text position, presentation type, and walking [3]. With researchers from Stuttgart and Saarbrücken, Mohamed Khamis from LMU Munich conducted a week-long study of how visible users’ faces and eyes are in the front-facing cameras of smartphones, in order to identify challenges, potentials, and applications for mobile interfaces that use face and eye tracking [4]. Miriam Greis et al. show that uncertainty visualization improves humans’ choice of internal models for information aggregation [5]. Thomas Kosch, who recently moved to LMU Munich, together with Paweł Woźniak from Stuttgart, Erin Brady from Purdue University Indianapolis, and Albrecht Schmidt, now also in Munich, investigates the design requirements of smart kitchens for people with cognitive impairments [16].

Here is a list of papers accepted for CHI 2018:

[1] Shadan Sadeghian Borojeni, Susanne Boll, Wilko Heuten, Heinrich Bülthoff, Lewis Chuang: Feel the Movement: Real Motion Influences Responses to Take-over Requests in Highly Automated Vehicles, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[2] Huy Viet Le, Sven Mayer, Patrick Bader, Niels Henze: Fingers’ Range and Comfortable Area for One-Handed Smartphone Interaction Beyond the Touchscreen, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[3] Rufat Rzayev, Paweł Woźniak, Tilman Dingler, Niels Henze: Reading on HMDs: The Effect of Text Position, Presentation Type and Walking, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[4] Mohamed Khamis, Anita Baier, Niels Henze, Florian Alt, Andreas Bulling: Understanding Face and Eye Visibility in Front-Facing Cameras of Smartphones used in the Wild, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[5] Miriam Greis, Aditi Joshi, Ken Singer, Tonja Machulla, Albrecht Schmidt: Uncertainty Visualization Improves Humans’ Choice of Internal Models for Information Aggregation, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[6] Simon Butscher, Sebastian Hubenschmid, Jens Müller, Johannes Fuchs, Harald Reiterer: Cluster, Trends, and Outliers: How Immersive Technologies can Facilitate Collaborative Analysis of Multidimensional, Health-Related Data, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[7] Christiane Glatz, Stas Krupenia, Heinrich Bülthoff, Lewis Chuang: Use the Right Sound for the Right Job: Verbal Commands and Auditory Icons for a Task-Management System Favor Different Information Processes in the Brain, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[8] Sven Mayer, Valentin Schwind, Robin Schweigert, Niels Henze: The Effect of Offset Correction and Cursor on Mid-Air Pointing in Real and Virtual Environments, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[9] Huy Viet Le, Thomas Kosch, Patrick Bader, Sven Mayer, Niels Henze: PalmTouch: Using the Palm as an Additional Input Modality on Commodity Smartphones, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[10] Roman Rädle, Hans-Christian Jetter, Jonathan Fischer, Inti Gabriel, Clemens N. Klokmose, Harald Reiterer, Christian Holz: PolarTrack: Optical Outside-In Device Tracking that Exploits Display Polarization, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[11] Sven Mayer, Lars Lischke, Jens Emil Grønbæk, Zhanna Sarsenbayeva, Jonas Vogelsang, Paweł Woźniak, Niels Henze, Giulio Jacucci: Pac-Many: Movement Behaviour when Playing Collaborative and Competitive Games on Large Displays, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[12] Pascal Knierim, Valentin Schwind, Anna Feit, Florian Nieuwenhuizen, Niels Henze: Physical Keyboards in Virtual Reality: Analysis of Typing Performance and Effects of Avatar Hands, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[13] Sven Mayer, Lars Lischke, Paweł Woźniak, Niels Henze: Evaluating the Disruptiveness of Mobile Interactions: A Mixed-Method Approach, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[14] Tilman Dingler, Rufat Rzayev, Ali Sahami, Niels Henze: Designing Consistent Gestures Across Device Types: Eliciting RSVP Controls for Phone, Watch, and Glasses, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[15] Thomas Kosch, Mariam Hassib, Paweł Woźniak, Daniel Buschek, Florian Alt: Your Eyes Tell: Leveraging Smooth Pursuit for Assessing Cognitive Workload, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.

[16] Thomas Kosch, Paweł Woźniak, Erin Brady, Albrecht Schmidt: Can I Assist You?: Investigating Design Requirements of Smart Kitchens for People with Cognitive Impairments, In: Proceedings of the Conference on Human Factors in Computing Systems, 2018.